GPU usage information for jobs in IBM Spectrum LSF

In my last blog, we ran through an example showing how IBM Spectrum LSF now automatically detects NVIDIA GPUs on hosts in the cluster and configures the scheduler accordingly.

In this blog, we take a closer look at the integration between Spectrum LSF and NVIDIA DCGM (Data Center GPU Manager), which provides GPU usage information for jobs submitted to the system.

#IBMSpectrum #LSF supports #NVIDIA DCGM, allowing you to get the most out of your #GPUs https://t.co/aCo9cFHNkq #HPCmatters

— Gábor SAMU (@gabor_samu) December 7, 2016

To enable the integration between Spectrum LSF and NVIDIA DCGM, set the LSF_DCGM_PORT=<port number> parameter in LSF_ENVDIR/lsf.conf:

```
root@kilenc:/etc/profile.d# cd $LSF_ENVDIR
root@kilenc:/opt/ibm/lsfsuite/lsf/conf# cat lsf.conf |grep -i DCGM
LSF_DCGM_PORT=5555
```

You can find more details about the LSF_DCGM_PORT parameter and what it enables in the lsf.conf reference of the IBM Spectrum LSF documentation.
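If you want to script this configuration check, the Python sketch below does two things. It reads LSF_DCGM_PORT from lsf.conf, then tests whether anything accepts TCP connections on that port on the local host.

A few assumptions apply:

- The function names are hypothetical.
- The fallback path /opt/ibm/lsfsuite/lsf/conf is simply the LSF_ENVDIR of the example host in this post.
- The parser assumes plain NAME=value lines.

A successful connection only shows that some process is listening on the port. It does not replace the dcgmi query.

```python
import os
import re
import socket
from pathlib import Path
from typing import Optional

# Assumption: lsf.conf holds simple NAME=value lines, optionally quoted.
_DCGM_PORT_RE = re.compile(r'LSF_DCGM_PORT\s*=\s*"?(\d+)"?')


def read_dcgm_port(conf_text: str) -> Optional[int]:
    """Return the LSF_DCGM_PORT value from lsf.conf text, or None if unset.

    In this sketch, if the parameter appears more than once, the last
    occurrence wins.
    """
    port = None
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        m = _DCGM_PORT_RE.fullmatch(line)
        if m:
            port = int(m.group(1))
    return port


def port_is_listening(port: int, host: str = "127.0.0.1", timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    This only shows that *some* process accepts connections on the port;
    it does not speak the DCGM protocol, so `dcgmi discovery -l` remains
    the authoritative check.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, ...
        return False


def main() -> None:
    # Fallback path taken from the example host in this post (hypothetical elsewhere).
    conf_dir = os.environ.get("LSF_ENVDIR", "/opt/ibm/lsfsuite/lsf/conf")
    conf_path = Path(conf_dir) / "lsf.conf"
    if not conf_path.is_file():
        print(f"{conf_path} not found; is LSF_ENVDIR set?")
        return
    port = read_dcgm_port(conf_path.read_text())
    if port is None:
        print(f"LSF_DCGM_PORT is not set in {conf_path}")
    elif port_is_listening(port):
        print(f"A process is accepting connections on port {port}")
    else:
        print(f"Nothing is listening on port {port}; is nv-hostengine running?")


if __name__ == "__main__":
    main()
```

Run it on the GPU host after starting nv-hostengine, in a shell where LSF_ENVDIR is set (as in the session above).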

Before continuing, make sure that the DCGM host engine (nv-hostengine) is running. Below, we start it on the default port, 5555, and run a discovery query with dcgmi to confirm that it is up.

```
root@kilenc:/opt/ibm/lsfsuite/lsf/conf# nv-hostengine
Started host engine version 1.4.6 using port number: 5555
root@kilenc:/opt/ibm/lsfsuite/lsf/conf# dcgmi discovery -l
1 GPU found.
+--------+-------------------------------------------------------------------+
| GPU ID | Device Information |
+========+===================================================================+
| 0      | Name: Tesla V100-PCIE-32GB |
|        | PCI Bus ID: 00000033:01:00.0 |
|        | Device UUID: GPU-3622f703-248a-df97-297e-df1f4bcd325c |
+--------+-------------------------------------------------------------------+
```

Next, let's submit a GPU job to IBM Spectrum LSF to demonstrate the collection of GPU accounting data. Note that the job must be submitted to Spectrum LSF with the exclusive mode specified (mode=exclusive_process in the example below) for its resource usage to be collected. As in my previous blog, we submit the gpu-burn test job (formally known as the Multi-GPU CUDA stress test).

test@kilenc:~/gpu-burn$ bsub -gpu "num=1:mode=exclusive_process" ./gpu_burn 120

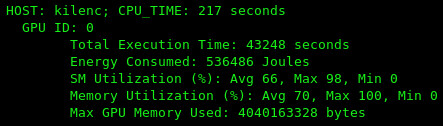

Job <54086> is submitted to default queue <normal>Job 54086 runs to successful completion and we use the Spectrum LSF bjobs command with the -gpu option to display the GPU usage information in the output below.

```
test@kilenc:~/gpu-burn$ bjobs -l -gpu 54086
Job <54086>, User <test>, Project <default>, Status <DONE>, Queue <normal>, Com
mand <./gpu_burn 120>, Share group charged </test>
Mon Oct 1 11:14:04: Submitted from host <kilenc>, CWD <$HOME/gpu-burn>, Reques
ted GPU <num=1:mode=exclusive_process>;
Mon Oct 1 11:14:05: Started 1 Task(s) on Host(s) <kilenc>, Allocated 1 Slot(s)
on Host(s) <kilenc>, Execution Home </home/test>, Executi
on CWD </home/test/gpu-burn>;
Mon Oct 1 11:16:08: Done successfully. The CPU time used is 153.0 seconds.

HOST: kilenc; CPU_TIME: 153 seconds
GPU ID: 0
Total Execution Time: 122 seconds
Energy Consumed: 25733 Joules
SM Utilization (%): Avg 99, Max 100, Min 64
Memory Utilization (%): Avg 28, Max 39, Min 9
Max GPU Memory Used: 30714888192 bytes
GPU Energy Consumed: 25733.000000 Joules

MEMORY USAGE:
MAX MEM: 219 Mbytes; AVG MEM: 208 Mbytes

SCHEDULING PARAMETERS:
r15s r1m r15m ut pg io ls it tmp swp mem
loadSched - - - - - - - - - - -
loadStop - - - - - - - - - - -

EXTERNAL MESSAGES:
MSG_ID FROM POST_TIME MESSAGE ATTACHMENT
0 test Oct 1 11:14 kilenc:gpus=0; N

RESOURCE REQUIREMENT DETAILS:
Combined: select[(ngpus>0) && (type == local)] order[gpu_maxfactor] rusage[ngp
us_physical=1.00]
Effective: select[((ngpus>0)) && (type == local)] order[gpu_maxfactor] rusage[
ngpus_physical=1.00]

GPU REQUIREMENT DETAILS:
Combined: num=1:mode=exclusive_process:mps=no:j_exclusive=yes
Effective: num=1:mode=exclusive_process:mps=no:j_exclusive=yes

GPU_ALLOCATION:
HOST TASK ID MODEL MTOTAL FACTOR MRSV SOCKET NVLINK
kilenc 0 0 TeslaV100_PC 31.7G 7.0 0M 8 -
```

And to close, yours truly spoke at the HPC User Forum in April 2018 (Tucson, AZ). I gave a short update on Spectrum LSF, focused on GPU support, as part of the vendor panel.
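The per-GPU accounting block in the bjobs -l -gpu output above uses a simple "label: value" layout, which makes it easy to post-process. The Python sketch below extracts a few fields and derives the average power draw as energy divided by execution time. For job 54086, that is 25733 J / 122 s, or roughly 210.9 W.

A few assumptions apply:

- The dictionary keys and function names are hypothetical.
- The regular expressions assume the field labels exactly as printed above.
- Only the first GPU block is read, so a multi-GPU job would need a loop over its GPU ID sections.

```python
import re
from typing import Dict, Optional

# Regexes for the per-GPU accounting lines shown in the bjobs -l -gpu output
# above. Assumption: other LSF releases may label these fields differently.
_PATTERNS = {
    "exec_time_s": r"^\s*Total Execution Time:\s*([\d.]+)\s*seconds",
    "energy_j": r"^\s*Energy Consumed:\s*([\d.]+)\s*Joules",  # not "GPU Energy ..."
    "sm_util_avg": r"^\s*SM Utilization \(%\):\s*Avg\s*(\d+)",
    "mem_util_avg": r"^\s*Memory Utilization \(%\):\s*Avg\s*(\d+)",
    "max_mem_bytes": r"^\s*Max GPU Memory Used:\s*(\d+)\s*bytes",
}


def parse_gpu_usage(text: str) -> Dict[str, float]:
    """Extract the first GPU's accounting fields from bjobs -l -gpu text."""
    usage: Dict[str, float] = {}
    for key, pattern in _PATTERNS.items():
        m = re.search(pattern, text, re.MULTILINE)
        if m:
            usage[key] = float(m.group(1))
    return usage


def average_power_w(usage: Dict[str, float]) -> Optional[float]:
    """Average power in watts: energy (J) divided by execution time (s)."""
    t = usage.get("exec_time_s")
    e = usage.get("energy_j")
    if not t or e is None:
        return None
    return e / t


if __name__ == "__main__":
    # Sample copied from the bjobs output for job 54086 in this post.
    sample = """\
GPU ID: 0
Total Execution Time: 122 seconds
Energy Consumed: 25733 Joules
SM Utilization (%): Avg 99, Max 100, Min 64
Memory Utilization (%): Avg 28, Max 39, Min 9
Max GPU Memory Used: 30714888192 bytes
GPU Energy Consumed: 25733.000000 Joules
"""
    usage = parse_gpu_usage(sample)
    power = average_power_w(usage)
    if power is not None:
        print(f"Average GPU power: {power:.1f} W")  # 210.9 W
    print(f"Max GPU memory: {usage['max_mem_bytes'] / 2**30:.1f} GiB")  # 28.6 GiB
```

In practice, the input would come from bjobs itself rather than a pasted sample. For example, use subprocess.run(["bjobs", "-l", "-gpu", "54086"], capture_output=True, text=True).stdout and pass that text to parse_gpu_usage.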